AI is reshaping our world. Yet, many companies struggle with high costs, slow performance, and complexity in AI development.

Combining Golang’s speed and simplicity with large language models’ human-like language understanding and smart automation offers a promising way to cut development time and lower expenses.

Read on to discover how this powerful mix can drive scalable, efficient AI innovation for your business.

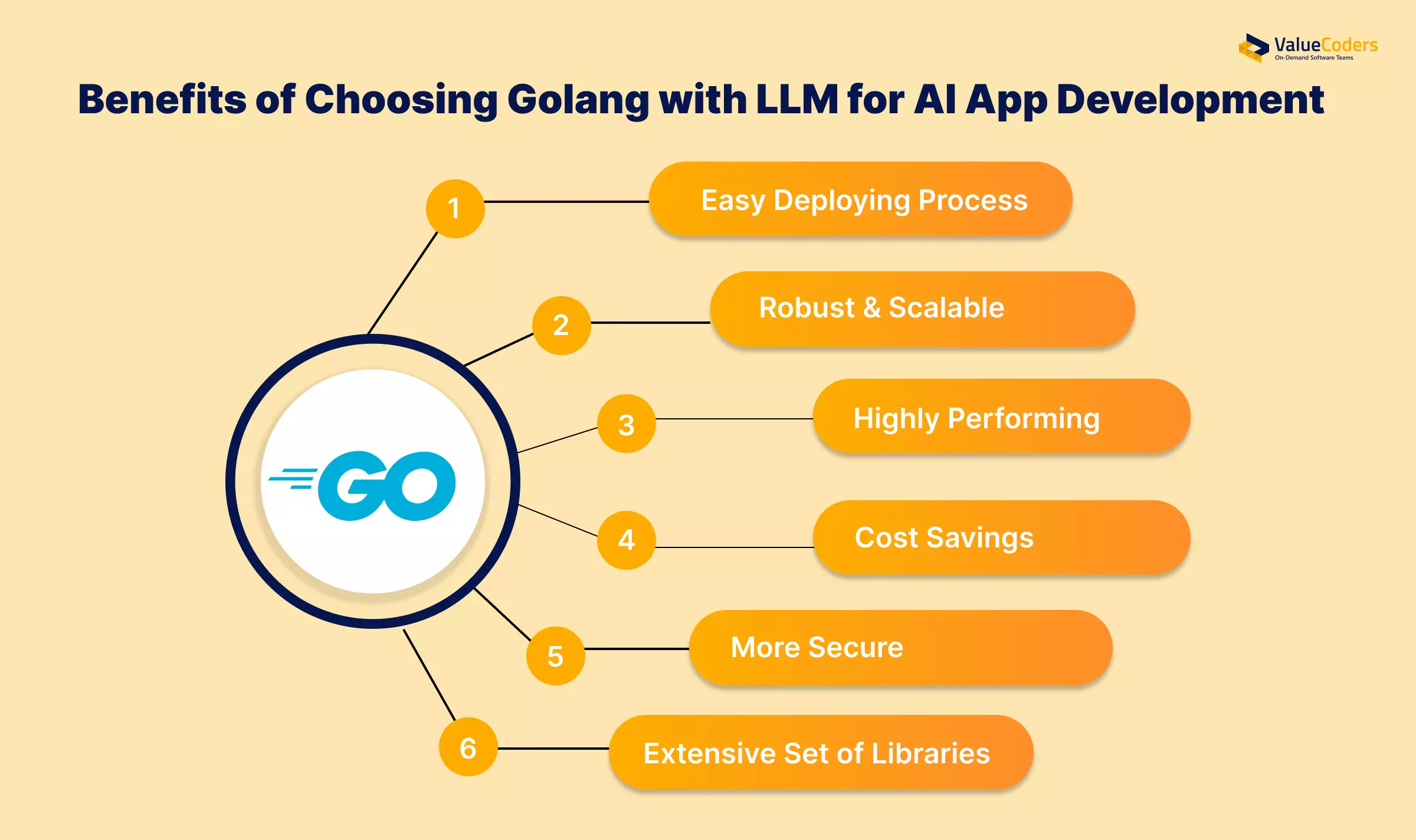

Why Golang with LLMs is the Preferred Choice for Businesses

Golang is an efficient programming language that fits the requirements of large language model (LLM) applications well. You can hire dedicated Golang developers in India to build high-performance AI applications using Go with LLMs.

Here is why Golang is the preferred choice for AI applications:

Easy Deployment Process

Golang simplifies deployment by compiling applications into self-contained, typically statically linked binaries that need no additional runtime dependencies, and the same code can be cross-compiled for multiple platforms. This makes it easier to deploy LLM-based applications to environments such as:

- Servers

- Edge systems

- Cloud environments

Minimal runtime requirements also reduce setup complexity and improve deployment speed. These features allow businesses to deploy robust applications while keeping resource usage low.

Robust & Scalable

Golang is a strong choice for building applications that must grow with demand. It is well suited to microservices, which let developers break functionality into smaller, manageable components that can each run and scale independently, such as:

- Sentiment analysis

- Recommendation engines

Go also integrates seamlessly with tools like Kubernetes and Docker, simplifying deployment in containerized environments. For example, a content recommendation system can use separate microservices to handle user preferences and scale them as needed.

High Performance

Golang is well suited to server-side applications, including computationally intensive workloads. Because it compiles to native machine code, it executes quickly, making it an excellent choice for applications built with LLM development services.

With a low-latency garbage collector and a low memory footprint, Go supports LLM-based applications that require quick processing and minimal overhead. These attributes make it ideal for distributed systems and serverless deployments.

Cost Savings

Thanks to its clean syntax and comprehensive standard library, Go enables faster coding and shorter development cycles. These features help developers build applications quickly, reducing the time needed to bring products to market.

Its lightweight nature also helps conserve computational resources, reducing infrastructure costs. Go’s concurrency model allows applications to manage multiple tasks efficiently, avoiding overspending on extra hardware or services.

More Secure

Go prioritizes secure application development with a strong, static type system and memory-safety features such as bounds-checked slices and garbage collection. These protections prevent buffer overflows and reduce the risk of memory-management bugs, cutting down vulnerabilities that attackers could exploit.

Go’s explicit error handling encourages developers to check failures rather than ignore them, and combined with careful input validation, this helps minimize risks such as injection attacks. Additionally, Go modules record checksums of third-party dependencies in the go.sum file, helping ensure that libraries are not tampered with and do not introduce unnecessary risks.

Extensive Set of Libraries

Golang offers a rich ecosystem of libraries and frameworks for building AI applications. Web frameworks such as Echo and Gin make it easy to set up RESTful APIs, middleware, and other essential services.

- Gonum and Go-Torch enable advanced computations

- GoNLP and spaCy help with sentiment analysis and text classification (spaCy is a Python library, so Go services typically call it through an API)

Go can also work alongside popular frameworks such as PyTorch and TensorFlow for machine learning and natural language processing tasks, for example through language bindings or by calling models served over HTTP or gRPC.


Top Challenges in Golang-LLM Integration

While Go and LLMs are a powerful combination for AI development, integrating them raises some specific challenges:

- Dependency on External Models: LLMs often rely on pre-trained models that require third-party tools or APIs, which adds external dependencies to your application.

- GPU Integration: Using GPUs for LLM training and inference from Go can be complex because Go has limited native support for GPU libraries such as CUDA.

- Debugging Complex Systems: Troubleshooting applications that combine Go’s concurrency model with LLM operations can be challenging.

- Data Management: For smooth operation, you must ensure that input data is formatted correctly and that model outputs are parsed and processed efficiently.
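For the data-management challenge, one common pattern is to ask the model for JSON and validate it before the rest of the application uses it. The sketch below uses only the standard library; the `Classification` struct, its fields, and the allowed labels are hypothetical examples, and the raw string in `main` stands in for a real model response.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"strings"
)

// Classification is a hypothetical shape we ask the LLM to return as JSON.
type Classification struct {
	Label      string  `json:"label"`
	Confidence float64 `json:"confidence"`
}

// allowedLabels is an illustrative label set; adjust it for your use case.
var allowedLabels = map[string]bool{"positive": true, "negative": true, "neutral": true}

// fence is three backticks, which models often wrap around JSON output.
var fence = strings.Repeat("`", 3)

// parseClassification strips optional code fences, decodes the payload,
// and validates its fields before returning it.
func parseClassification(raw string) (Classification, error) {
	s := strings.TrimSpace(raw)
	s = strings.TrimPrefix(s, fence+"json")
	s = strings.TrimPrefix(s, fence)
	s = strings.TrimSuffix(s, fence)
	s = strings.TrimSpace(s)

	var c Classification
	dec := json.NewDecoder(strings.NewReader(s))
	dec.DisallowUnknownFields() // reject unexpected keys instead of ignoring them
	if err := dec.Decode(&c); err != nil {
		return Classification{}, fmt.Errorf("decode model output: %w", err)
	}
	if !allowedLabels[c.Label] {
		return Classification{}, fmt.Errorf("unexpected label %q", c.Label)
	}
	if c.Confidence < 0 || c.Confidence > 1 {
		return Classification{}, errors.New("confidence must be between 0 and 1")
	}
	return c, nil
}

func main() {
	// Stand-in for a real model response wrapped in a code fence.
	raw := fence + "json\n{\"label\": \"positive\", \"confidence\": 0.92}\n" + fence
	c, err := parseClassification(raw)
	if err != nil {
		fmt.Println("invalid output:", err)
		return
	}
	fmt.Printf("%s (%.2f)\n", c.Label, c.Confidence)
}
```

Rejecting malformed output early keeps bad data from flowing into downstream services, and the caller can decide whether to retry the prompt.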

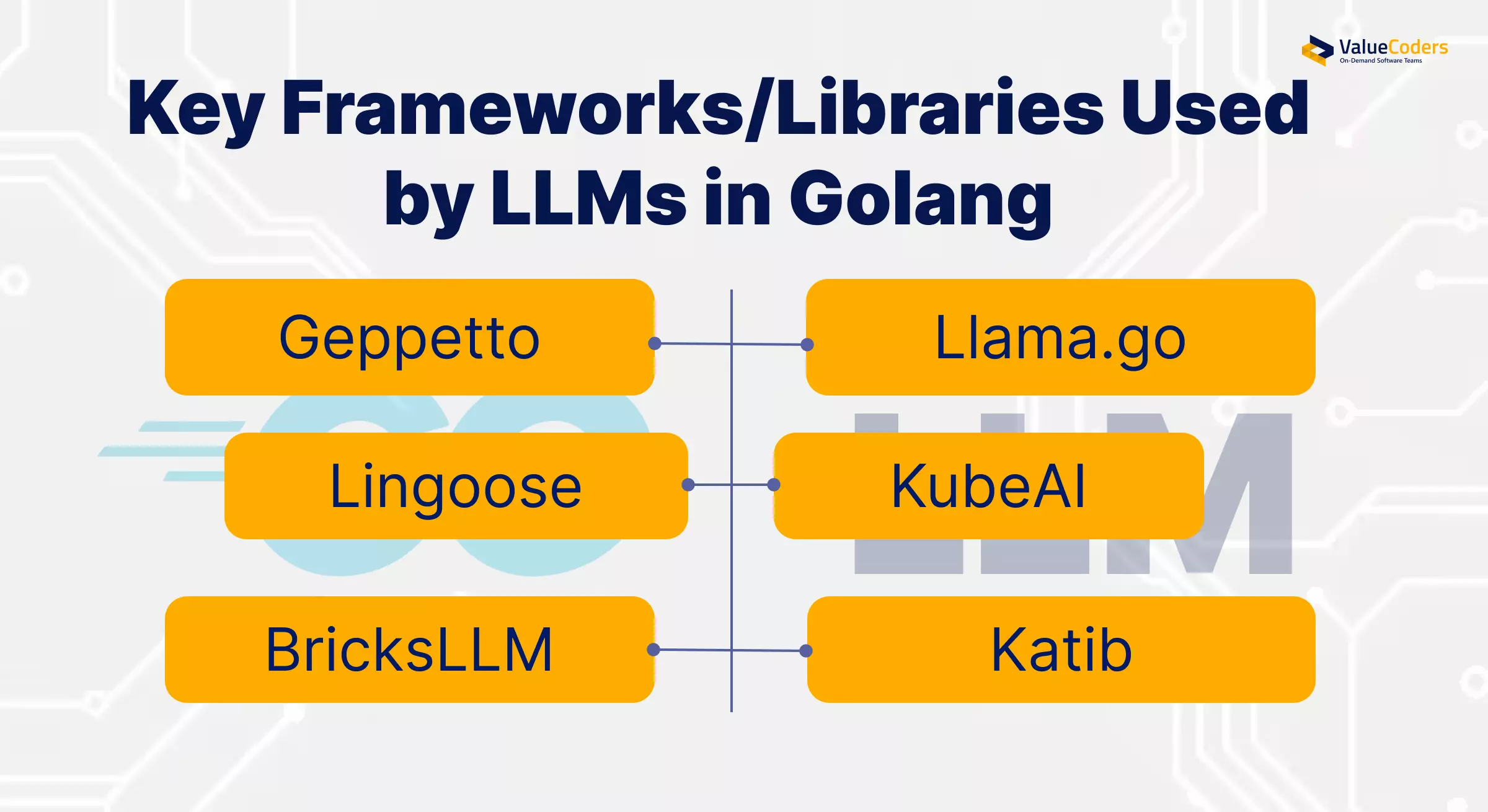

Top Libraries and Frameworks for Go-LLM Integration

Several powerful libraries and Golang AI frameworks help integrate Large Language Models (LLMs) with Golang for AI development. These enable seamless processing of text, automation, and AI-driven applications.

- Geppetto: Helps build applications with declarative chains for complex language tasks.

- Llama.go: A Go implementation for running LLMs locally, suitable for experimentation and production.

- BricksLLM: Offers monitoring tools, access control, and support for open-source and commercial LLMs in AI applications.

- Lingoose: Provides a flexible framework for creating LLM-powered applications with minimal effort.

- KubeAI: Optimized for Kubernetes environments, streamlining LLM deployment in containerized setups.

- Katib: Focuses on automating machine learning workflows, including hyperparameter tuning for LLMs.


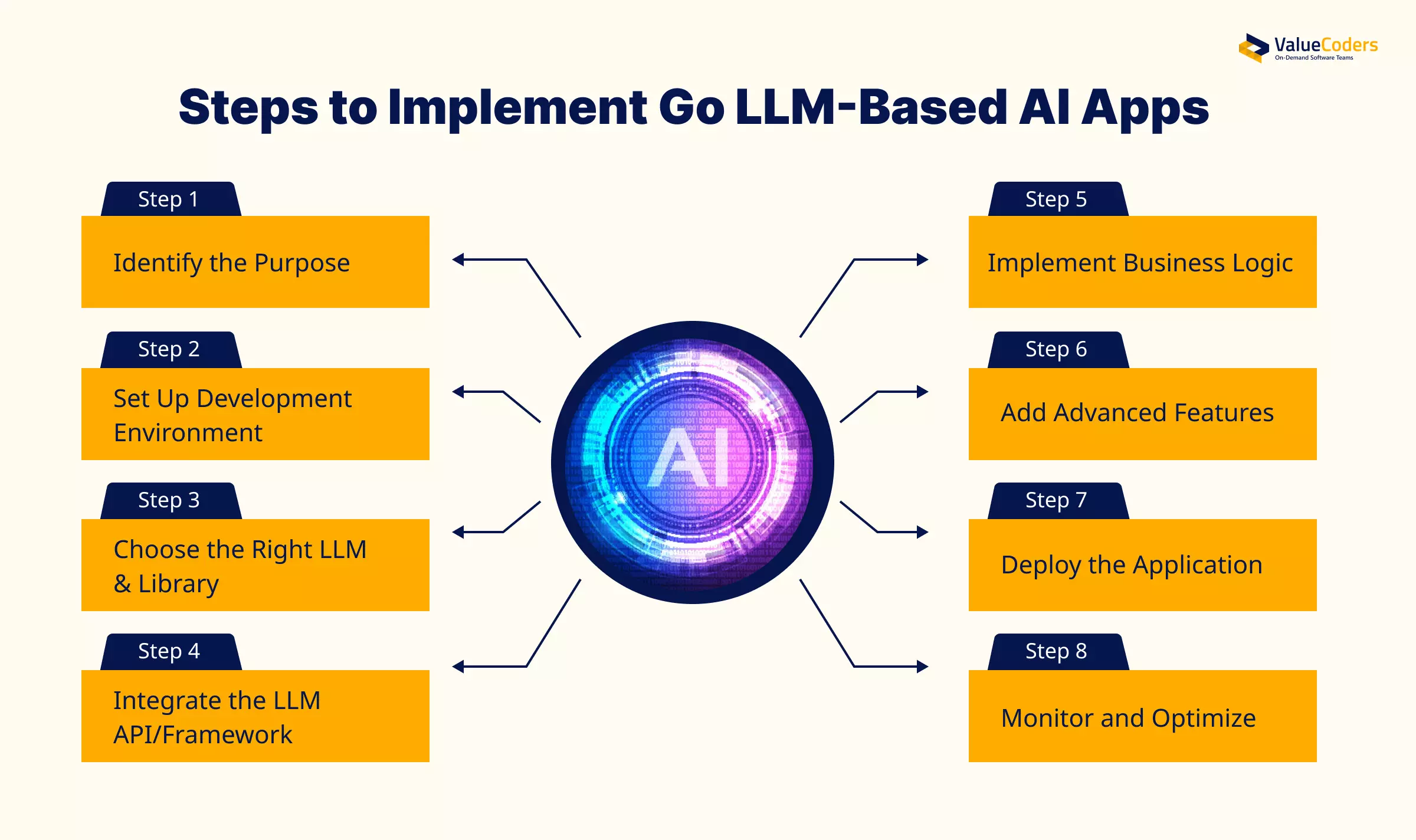

8 Steps to Build a Golang LLM-Based AI Application

AI services companies that build LLM-powered applications in Go typically follow these steps to simplify the integration process:

Step 1: Identify the Purpose

Before you start integrating Go with an LLM, define the purpose of your application. Identify clear use cases so that you can choose the right LLM and supporting tools.

Determine the tasks your application (for example, a chatbot) needs to handle, such as:

- Document summarization

- Customer service

- Sentiment analysis

- Text classification

- Content generation

Step 2: Set Up Development Environment

Once you have identified the purpose, install the latest stable version of Go on your system. To set up the development environment:

- Download the latest version of Go from go.dev (formerly golang.org)

- Check environment variables such as GOROOT and GOPATH; with Go modules, the defaults usually work without changes

- Use Go modules (go mod) to manage your project’s dependencies

Step 3: Choose the Right LLM & Library

The third step is to choose the appropriate large language model and libraries for your app. This involves:

- Libraries such as Geppetto (for chaining prompts) and Llama.go (for running models locally)

- An OpenAI Go client library for models such as GPT-4

- Hugging Face models, integrated through gRPC endpoints or Go-based APIs

Step 4: Integrate the LLM API/Framework

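Start by configuring your Golang LLM app with the API key for your provider (for OpenAI, store the key in a .env file or configuration file rather than hard-coding it in production) and adding the necessary Go packages.

For example, the following program uses a placeholder key for brevity: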

package main

import (
	"context"
	"fmt"
	"log"

	"github.com/sashabaranov/go-openai"
)

func main() {
	// Initialize the OpenAI client with your API key
	client := openai.NewClient("YOUR_API_KEY")

	// Create a chat completion request
	request := openai.ChatCompletionRequest{
		Model: openai.GPT4, // Specify the model you want to use
		Messages: []openai.ChatCompletionMessage{
			{Role: openai.ChatMessageRoleUser, Content: "Explain the benefits of using Golang for AI development."},
		},
	}

	// Send the request and capture the response
	response, err := client.CreateChatCompletion(context.Background(), request)
	if err != nil {
		log.Fatalf("Error creating chat completion: %v", err)
	}

	// Guard against an empty response before indexing into Choices
	if len(response.Choices) == 0 {
		log.Fatal("No choices returned by the model")
	}

	// Print the response message content
	fmt.Println("Response from LLM:")
	fmt.Println(response.Choices[0].Message.Content)
}

How to Run

Now, follow these steps to run the above program. If this seems too technical to do on your own, consider hiring top LLM developers:

1. Replace “YOUR_API_KEY” with your actual OpenAI API key.

2. If your project does not have a go.mod file yet, initialize a module (llm-demo is just an example name), then install the go-openai package:

go mod init llm-demo

go get github.com/sashabaranov/go-openai

3. Save the code as main.go and run it:

go run main.go

This code sends a prompt to the OpenAI GPT-4 model and prints the response. You can modify the message content or model as needed.


Step 5: Implement Business Logic

Now it’s time to build your app’s core functionality. Structure it as modular services, with support from AI consulting services if needed, such as:

- Input pre-processing

- Prompt generation

- Output formatting

Go’s concurrency features, such as goroutines and channels, help you handle multiple user requests efficiently. You can also integrate additional APIs for tasks like data processing and retrieval.
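The modular services listed above (input pre-processing, prompt generation, and output formatting) can be kept as separate stages, with goroutines handling several user requests at once. A minimal sketch of that structure; `callLLM` is a hypothetical stand-in for a real model call, and the stage functions are illustrative.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
)

// preprocess collapses whitespace and truncates input to maxLen runes.
func preprocess(input string, maxLen int) string {
	clean := strings.Join(strings.Fields(input), " ")
	if r := []rune(clean); len(r) > maxLen {
		clean = string(r[:maxLen]) // cut on rune boundaries, not bytes
	}
	return clean
}

// buildPrompt wraps the cleaned input in a task-specific instruction.
func buildPrompt(task, input string) string {
	return fmt.Sprintf("Task: %s\nInput: %s", task, input)
}

// callLLM is a hypothetical stand-in for a real model call.
func callLLM(prompt string) string {
	return "  result for [" + prompt + "]  "
}

// formatOutput trims the raw model output for display.
func formatOutput(raw string) string {
	return strings.TrimSpace(raw)
}

// handle runs one request through all three stages.
func handle(task, input string) string {
	return formatOutput(callLLM(buildPrompt(task, preprocess(input, 200))))
}

// handleAll processes requests concurrently, one goroutine per request,
// and returns results in the original order.
func handleAll(task string, inputs []string) []string {
	results := make([]string, len(inputs))
	var wg sync.WaitGroup
	for i, in := range inputs {
		wg.Add(1)
		go func(i int, in string) {
			defer wg.Done()
			results[i] = handle(task, in) // each goroutine writes only its own index
		}(i, in)
	}
	wg.Wait()
	return results
}

func main() {
	out := handleAll("summarize", []string{"  first   request ", "second request"})
	for _, o := range out {
		fmt.Println(o)
	}
}
```

Keeping each stage as a small function makes it easy to test or replace one stage independently. For large request volumes, a bounded worker pool is usually safer than one goroutine per request.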

Step 6: Add Advanced Features

You can enhance your application with additional capabilities, such as:

- User interactions

- Analytics

- Custom models

- Model performance analysis

Step 7: Deploy the Application

It is time to deploy your Go-based LLM app for market launch. Package it with Docker for consistent deployments, and host it on Google Cloud, AWS, or Azure, using Kubernetes for scaling.

For cost efficiency, you can also opt for a serverless platform such as Google Cloud Functions.

Step 8: Monitor and Optimize

Continuously monitor and improve your application. Use tools such as Prometheus and Grafana to track performance, optimize prompts and API calls to reduce latency, and regularly update models and libraries to benefit from the latest advancements.


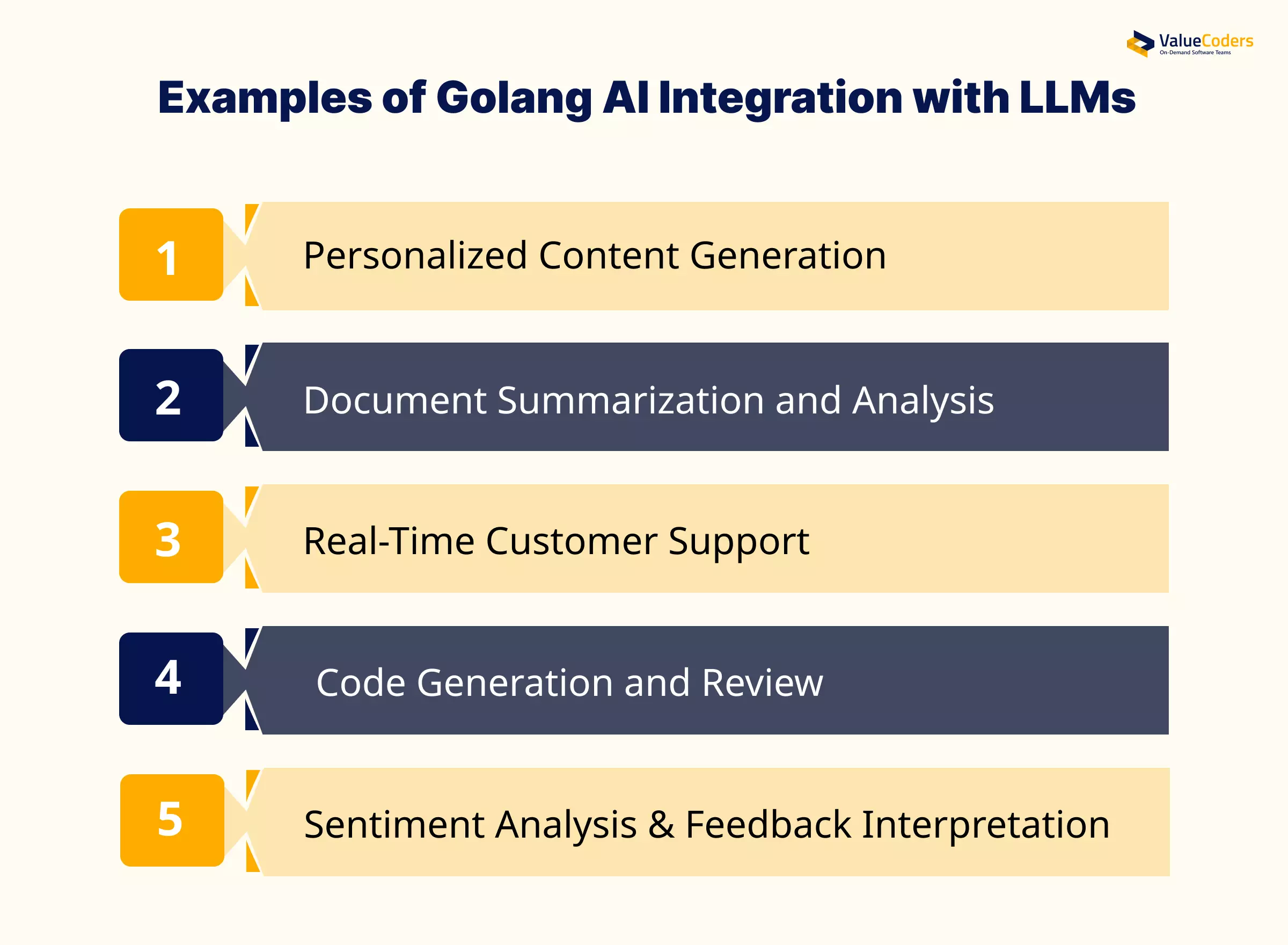

Real-World Applications of Golang-LLM Integration

Many enterprises use Golang and LLMs to build advanced AI applications, from chatbots to automated content analysis. Let’s explore some common examples of Golang AI integration using LLMs:

Personalized Content Generation

Go-based applications powered by LLMs can generate emails, blog posts, and product descriptions based on user preferences. Its key features include:

- Integration with LLM APIs through Go’s HTTP clients and JSON handling

- Context-aware content creation using user preferences and historical data stored in Go structures

- Rate limiting and request optimization using Go’s concurrency features like goroutines and channels

- Template-based generation with Go’s text/template package combined with LLM outputs

- Error handling and fallback mechanisms for reliable content delivery

Document Summarization and Analysis

Enterprises use these tools to condense lengthy documents, making it easier to process information quickly. Its key features include:

- PDF and document parsing using Go libraries like pdfcpu or UniDoc

- Chunking large documents into processable segments using Go’s string manipulation

- Parallel processing of document sections using Go’s concurrent features

- Metadata extraction and organization using Go structs

- Integration with vector databases like Milvus or Weaviate for semantic search capabilities

Real-Time Customer Support

Golang and LLM-based chatbots handle thousands of customer queries simultaneously, improving response times. Its key features include:

- WebSocket implementations for live chat functionality using gorilla/websocket

- Queue management for incoming requests using Go channels

- Per-session conversation history, with Go’s context package handling request timeouts and cancellation

- Integration with existing customer databases and CRM systems

- Automated response generation with failover to human support

- Response caching and optimization using Go’s sync package

Code Generation and Review

Developers rely on LLMs to automate repetitive coding tasks and review code for potential improvements. Its key features include:

- Abstract Syntax Tree (AST) parsing using Go’s built-in go/parser package

- Integration with version control systems using go-git

- Pattern matching and code analysis using regular expressions

- Concurrent processing of multiple code files

- Static code analysis integration with LLM suggestions

- Custom rule enforcement and style checking
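For the AST-parsing feature, Go’s standard go/parser and go/ast packages can extract each function’s name, line, and source text so that functions can be sent to an LLM for review one at a time. A minimal sketch; `FuncInfo` and `extractFuncs` are hypothetical names, and the LLM call itself is omitted.

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
	"go/token"
)

// FuncInfo describes one top-level function or method found in the source.
type FuncInfo struct {
	Name string
	Line int
	Code string
}

// extractFuncs parses Go source and returns every function declaration
// with its starting line and exact source text (excluding doc comments).
func extractFuncs(filename, src string) ([]FuncInfo, error) {
	fset := token.NewFileSet()
	file, err := parser.ParseFile(fset, filename, src, 0)
	if err != nil {
		return nil, err
	}
	var funcs []FuncInfo
	for _, decl := range file.Decls {
		fn, ok := decl.(*ast.FuncDecl)
		if !ok {
			continue // skip imports, types, vars, and consts
		}
		start := fset.Position(fn.Pos())
		end := fset.Position(fn.End())
		funcs = append(funcs, FuncInfo{
			Name: fn.Name.Name,
			Line: start.Line,
			Code: src[start.Offset:end.Offset],
		})
	}
	return funcs, nil
}

func main() {
	src := `package demo

func Add(a, b int) int { return a + b }

func Div(a, b int) int { return a / b }
`
	funcs, err := extractFuncs("demo.go", src)
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	for _, f := range funcs {
		// Each snippet would be sent to an LLM together with a review instruction.
		fmt.Printf("Review line %d, %s:\n%s\n\n", f.Line, f.Name, f.Code)
	}
}
```

Sending one function at a time keeps prompts small and lets review comments point to exact line numbers.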

Sentiment Analysis & Feedback Interpretation

Go applications analyze customer reviews and social media comments to gauge public sentiment and identify areas of improvement. Its key features include:

- Batch processing of feedback data using Go’s concurrent features

- Integration with SQL databases for storing and analyzing feedback trends

- Real-time sentiment scoring using Go’s efficient processing

- Statistical analysis using Go’s math packages

- Custom categorization and tagging systems

- Aggregation of sentiment data across multiple sources
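Batch processing and cross-source aggregation can be sketched as a fan-out over goroutines followed by per-source averaging. The `Feedback` type and the keyword-based `score` function are hypothetical placeholders; in a real system `score` would call an LLM or a sentiment model, and results would be stored in the SQL databases listed above.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
	"sync"
)

// Feedback is one review or comment from a given source.
type Feedback struct {
	Source string
	Text   string
}

// score is a placeholder scorer returning -1, 0, or 1; replace with an LLM call.
func score(text string) float64 {
	t := strings.ToLower(text)
	switch {
	case strings.Contains(t, "love") || strings.Contains(t, "great"):
		return 1
	case strings.Contains(t, "slow") || strings.Contains(t, "bad"):
		return -1
	default:
		return 0
	}
}

// aggregate scores all feedback concurrently and returns the average
// sentiment per source.
func aggregate(items []Feedback) map[string]float64 {
	scores := make([]float64, len(items))
	var wg sync.WaitGroup
	for i, f := range items {
		wg.Add(1)
		go func(i int, text string) {
			defer wg.Done()
			scores[i] = score(text)
		}(i, f.Text)
	}
	wg.Wait()

	sums := map[string]float64{}
	counts := map[string]int{}
	for i, f := range items {
		sums[f.Source] += scores[i]
		counts[f.Source]++
	}
	avg := make(map[string]float64, len(sums))
	for src, s := range sums {
		avg[src] = s / float64(counts[src])
	}
	return avg
}

func main() {
	items := []Feedback{
		{"reviews", "I love the new dashboard"},
		{"reviews", "Checkout is slow"},
		{"twitter", "Great support team"},
	}
	avg := aggregate(items)
	sources := make([]string, 0, len(avg))
	for s := range avg {
		sources = append(sources, s)
	}
	sort.Strings(sources) // map iteration order is random; sort for stable output
	for _, s := range sources {
		fmt.Printf("%s: %.2f\n", s, avg[s])
	}
}
```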


Future of Golang and LLM in AI Applications

Go and LLMs are likely to play a vital role in shaping the future of AI. Go’s efficiency and LLMs’ capabilities can support advances in real-time applications, IoT integration, and on-device AI.

With growing concerns about data security, Go’s built-in safety features make it a strong choice for AI projects that handle sensitive information. As industries demand smarter applications, this partnership is likely to keep driving innovation in AI.


Conclusion

Golang and LLMs together are redefining modern AI development by combining scalability, speed, and efficiency. By using Golang’s concurrency model and LLMs’ advanced language capabilities, businesses can develop intelligent apps.

Whether for content creation, customer support, or analytics, this duo is all set to shape the future of AI-powered solutions.

Bring your AI vision to life with ValueCoders. Our software teams ensure a seamless transition from concept to deployment.

We specialize in integrating Golang with LLMs to build high-performance AI apps. Our approach includes custom AI development, continuous optimization, scalable architecture, and seamless integration.