In 2023, hackers reportedly used AI-generated Deepfakes to bypass a major bank’s facial recognition security, leading to a multimillion-dollar heist.

This event highlighted the rapid rise of deepfake technology and its potential for misuse.

Deepfakes, which use AI to create hyper-realistic fake images and videos, are becoming increasingly difficult to detect. As these forgeries advance, they pose an alarming threat to the systems designed to keep us secure, such as facial biometric authentication.

Join us as we explore how AI Deepfakes reduce the reliability of facial recognition technology, which Deepfake biometrics threats matter most, and how they affect the future of digital security.

An Overview of AI Deepfakes

AI Deepfakes are defined as highly realistic but artificially created media, generated using advanced machine learning techniques.

They are often produced using Generative Adversarial Networks (GANs), which pit two neural networks against each other to create convincing fake images, videos, or audio that mimic real people.

Let’s examine the main types of Deepfakes behind Deepfake biometrics threats:

1. Video Deepfakes: This involves manipulating video content to alter a person’s appearance, expressions, or movements.

2. Audio Deepfakes: Using AI, audio Deepfakes can mimic someone’s voice, creating fake speeches or conversations.

3. Image-Based Deepfakes: These Deepfakes are static images where facial features are altered or replaced with another person’s likeness. Facial Deepfakes are particularly concerning because they can be used to bypass facial biometric systems.

How AI Deepfakes Work

The creation of Deepfakes begins with gathering extensive data, such as images or video footage, of the target individual. This data is fed into GANs, where one network (the generator) produces the fake content while the other (the discriminator) evaluates its authenticity.

Through repeated iterations, the system refines the fake until it becomes nearly indistinguishable from actual footage. This sophisticated process allows for the creation of Deepfakes that can deceive even trained observers.
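
To make the generate-and-evaluate loop concrete, here is a deliberately tiny sketch. It is not a Deepfake model: the "real data" is just numbers drawn around 4.0, the generator and discriminator each have two parameters, and every name and setting (`train_toy_gan`, the learning rate, the step count) is an assumption chosen for illustration.

```python
import math
import random


def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, seed=0):
    """Toy 1-D GAN: the 'real' data are numbers near 4.0 (an assumption)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: G(z) = a * z + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)
        z = rng.gauss(0.0, 1.0)
        fake = a * z + b

        # Discriminator step: push D(real) toward 1 and D(fake) toward 0.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w -= lr * (-(1.0 - d_real) * real + d_fake * fake)
        c -= lr * (-(1.0 - d_real) + d_fake)

        # Generator step: adjust G so the updated D rates the fake as real.
        d_fake = sigmoid(w * fake + c)
        grad_fake = -(1.0 - d_fake) * w
        a -= lr * grad_fake * z
        b -= lr * grad_fake
    return a, b, w, c


a, b, w, c = train_toy_gan()
print(f"generator: G(z) = {a:.2f} * z + {b:.2f}")
```

Real Deepfake generators replace these two-parameter functions with deep networks trained on images, but the alternating update pattern is the same idea described above.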

How Facial Biometric Authentication Works

Facial recognition systems capture an image or video of an individual’s face and convert it into a digital format. A feature extraction step then measures distinguishing characteristics, such as the distance between the eyes, the contour of the cheekbones, and the shape of the jawline.

These features are converted into a mathematical representation, or facial signature, which is matched against stored templates in the system’s database using matching algorithms.

If the captured signature aligns with a template, the system grants access or confirms identity.
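
The matching step can be sketched as a similarity comparison between vectors. The snippet below is a minimal illustration, assuming the facial signature is a plain list of numbers and that cosine similarity with a fixed threshold is the matching rule; real systems use their own feature extractors, metrics, and thresholds, and the names here (`match_signature`, the `0.9` threshold) are hypothetical.

```python
import math


def cosine_similarity(u, v):
    # Similarity of two equal-length vectors, in the range [-1, 1].
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def match_signature(probe, templates, threshold=0.9):
    """Return (user_id, score) for the best template, or (None, score) if below threshold."""
    best_id, best_score = None, -1.0
    for user_id, template in templates.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_id, best_score = user_id, score
    if best_score >= threshold:
        return best_id, best_score
    return None, best_score


# Hypothetical three-number signatures; real ones have far more dimensions.
templates = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
print(match_signature([0.99, 0.05, 0.0], templates))  # close to alice's template
print(match_signature([1.0, 1.0, 0.0], templates))    # ambiguous, so rejected
```

Note that the matcher only sees numbers. If a Deepfake produces a signature close enough to a stored template, this step alone has no way to tell the difference.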

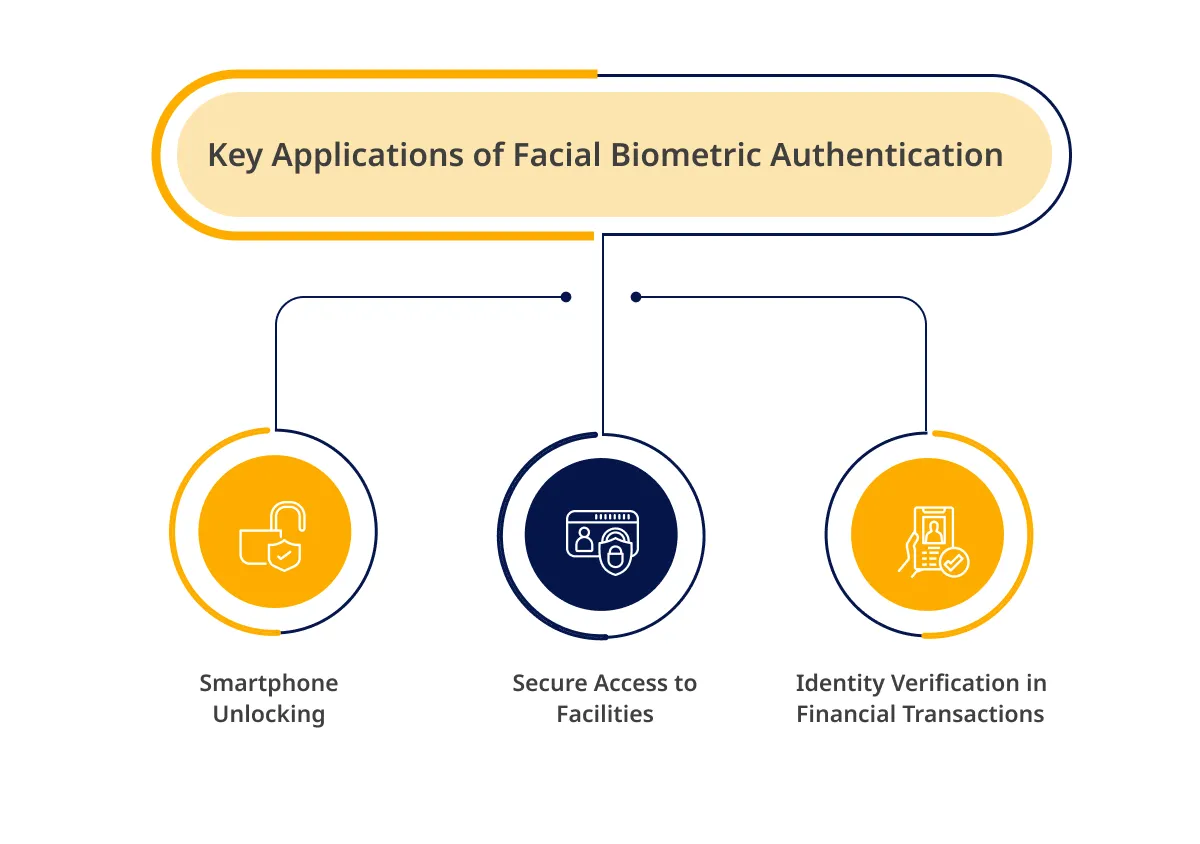

Applications of Facial Biometric Authentication

Building secure applications is a pressing need. Key applications of facial biometric authentication include:

- Smartphone Unlocking: Many modern smartphones use facial recognition to unlock the device, offering a quick and secure access method.

- Secure Access to Facilities: Facial biometric systems control access to secure areas, ensuring only authorized personnel can enter.

- Identity Verification in Financial Transactions: Banks and financial institutions use facial recognition to verify identities in online transactions, enhancing security in digital banking and payment systems.

Security Strengths and Weaknesses

Facial recognition systems come with both strengths and weaknesses.

Strengths include

- Convenience: Facial recognition provides a fast, hands-free way to authenticate identity.

- Non-Intrusiveness: The process is seamless and does not require physical contact or additional effort from the user.

Weaknesses include

- Susceptibility to Spoofing: Despite their advantages, facial biometric systems can be vulnerable to spoofing attacks, in which attackers use photos, videos, or Deepfakes to fool the system.

- False Positives/Negatives: No matcher is perfectly accurate. A false positive accepts the wrong person and a false negative rejects the right one, and tuning the system to reduce one type of error typically increases the other.
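
The false positive and false negative weakness listed above is usually measured as two rates at a chosen match threshold: the false accept rate (impostors let in) and the false reject rate (genuine users turned away). The sketch below uses made-up similarity scores purely for illustration; the function name and the data are assumptions.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false_accept_rate, false_reject_rate) at the given threshold."""
    false_rejects = sum(1 for s in genuine_scores if s < threshold)
    false_accepts = sum(1 for s in impostor_scores if s >= threshold)
    far = false_accepts / len(impostor_scores)
    frr = false_rejects / len(genuine_scores)
    return far, frr


# Made-up scores: genuine attempts should score high, impostor attempts low.
genuine = [0.95, 0.91, 0.88, 0.97]
impostor = [0.40, 0.92, 0.55, 0.61]

for threshold in (0.85, 0.90, 0.99):
    far, frr = error_rates(genuine, impostor, threshold)
    print(f"threshold={threshold:.2f}  FAR={far:.2f}  FRR={frr:.2f}")
```

Raising the threshold blocks more impostors but rejects more genuine users. A convincing Deepfake behaves like an impostor attempt with a very high score, which is why threshold tuning alone does not solve spoofing.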

The Threat of AI Deepfakes to Facial Biometrics

“Deepfakes pose a clear challenge to the public, national security, law enforcement, financial and societal domains. With the advancement in deepfake technology, it can be used for personal gains by victimizing the general public and companies.”

– Forbes

Facial recognition systems, widely used for security and authentication, rely entirely on unique facial features to verify a person’s identity.

However, the rise of Deepfake technology poses a significant threat to these systems. AI Deepfakes can generate highly realistic fake faces that mimic a target individual’s facial features, expressions, and even subtle movements.

Using advanced machine learning techniques such as GANs, Deepfake creators can produce fake videos or images that are nearly indistinguishable from real ones.

When these counterfeit images or videos are presented to a facial recognition system, the system may be unable to differentiate between the genuine and the fake, leading to false identifications.

Hence, bad actors can exploit this vulnerability to bypass security measures, gain unauthorized access, or impersonate someone else, representing a critical weakness in relying solely on facial biometrics for authentication.

List of Deepfake Biometrics Threats

As Deepfake technology advances, the AI Deepfake risks associated with its misuse will likely increase. This makes it imperative for organizations to explore additional layers of security beyond facial recognition alone.

The following cases show the growing sophistication of AI Deepfakes and their potential to undermine the reliability of facial biometric systems.

1. Phone Unlocking Exploit: Researchers created a Deepfake video of a smartphone owner’s face and successfully used it to unlock the phone. The Deepfake tricked the facial recognition system into believing it interacted with a legitimate user, revealing a critical vulnerability in mobile security.

2. Corporate Espionage Test: An AI services company conducted an experiment in which Deepfake videos of IT executives were used to gain unauthorized access to secure areas within a corporate office. The experiment demonstrated how Deepfakes could be used for espionage or to breach sensitive environments.

3. Banking System Breach: In another incident, a Deepfake was used to impersonate a high-ranking executive during a video verification process for a financial transaction. The Deepfake convinced the facial recognition software that the person in the video was legitimate, enabling the transfer of significant funds.

4. Political Deepfake Attack: During a political campaign, Deepfakes were used to create fake videos of a candidate saying things they never actually said. Although these were not aimed at biometric systems, they raised awareness about how easily Deepfakes could be weaponized to manipulate public opinion and potentially breach security.

5. Fake Identity Verification: Hackers used Deepfakes to create counterfeit IDs that passed through automated facial recognition systems during online verification. These counterfeit IDs were used to set up fraudulent accounts, bypassing traditional security measures.

6. Security System Bypass: Researchers showed during security testing that Deepfakes could bypass physical security systems reliant on facial recognition. They successfully entered a secure facility using a Deepfake of an authorized individual.

7. Law Enforcement Concerns: Authorities have flagged cases where Deepfakes were suspected of being used for identity fraud. Criminals could use Deepfake technology to outsmart surveillance systems or create false alarms, complicating investigations and undermining the reliability of facial recognition used in security.

Potential Consequences of Deepfake Biometrics Threats

Deepfake biometric authentication threats carry serious implications for identity security, financial integrity, and legal systems:

Identity Theft

AI Deepfakes often accurately mimic a person’s facial features, allowing cybercriminals to impersonate individuals effectively. Using these fake identities, attackers can bypass facial biometric systems, gaining unauthorized access to personal accounts and sensitive information.

Victims of identity theft may face severe repercussions, including loss of privacy, financial damages, and the time-consuming process of restoring their true identity.

Financial Fraud

Deepfakes pose a significant risk to financial institutions that rely on facial biometrics for security. By creating convincing Deepfakes, attackers can trick systems into approving fraudulent transactions, leading to substantial financial losses.

Successful Deepfake attacks can erode trust in financial systems, making individuals and institutions wary of using facial recognition for identity verification. AI-driven safeguards in fintech can help address this.

Unauthorized Access

Deepfakes can create realistic videos or images of authorized personnel, allowing attackers to access restricted areas. This breach can have serious implications, especially in sensitive locations like government buildings, research facilities, or corporate offices.

In high-stakes environments, unauthorized access facilitated by Deepfakes could compromise national security or lead to intellectual property theft.

Legal Issues

The use of Deepfakes in committing crimes creates legal challenges. Proving that an image or video is fake can be difficult, leading to potential complications in the judicial process.

If Deepfakes are used to create misleading evidence, they could influence legal outcomes and undermine the judicial system’s integrity.

Challenges of Detecting & Mitigating Deepfake Biometrics Threats

Organizations must understand the following challenges and take proactive steps to enhance their security measures and protection against the growing Deepfake biometrics threats.

Challenge 1: Sophistication of Deepfakes

AI Deepfakes are becoming increasingly sophisticated, with AI models capable of generating highly realistic fake images, videos, and audio.

This sophistication makes it difficult for traditional detection methods to distinguish between genuine and fake content, posing a significant challenge to security systems that rely on facial biometrics.

Even trained professionals struggle to identify Deepfakes, as the technology behind them evolves to mimic subtle facial expressions and movements.

Challenge 2: Detection Tools

To combat Deepfake biometrics threats, organizations are developing AI-based detection tools that analyze media for inconsistencies. These tools use machine learning algorithms to spot anomalies in facial movements, lighting, and pixel patterns that may indicate a Deepfake.

However, as detection tools improve, so do the methods used to create Deepfakes. This creates a constant cat-and-mouse game where detection technology must continually evolve to keep up with the latest Deepfake advancements.

Challenge 3: Continuous Evolution

Due to the rapid evolution of Deepfake technology, new and more convincing Deepfakes are constantly emerging. As a result, organizations must stay vigilant and update their security protocols regularly to protect against these evolving threats.

Continuous research and development are essential to staying ahead of Deepfake technology. This includes improving detection tools and understanding the underlying AI techniques for creating Deepfake biometrics threats.

Integration with Other Security Measures To Mitigate Risks

Companies must consider integrating facial biometrics with other security measures, such as multi-factor authentication, to reduce the risks Deepfakes pose. This approach adds layers of security and makes it more difficult for Deepfakes to compromise systems.

Incorporating behavioral biometrics, such as voice recognition or typing patterns, further enhances security, providing additional verification methods less susceptible to Deepfake biometrics threats.
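
A layered check of this kind can be sketched as a small decision function. This is an illustrative policy only: the function name, the three outcomes, and the `0.9` threshold are assumptions, and a real deployment would define its own factors and escalation rules.

```python
def decide_access(face_score, liveness_ok, second_factor_ok, face_threshold=0.9):
    """Combine a face match with a liveness check and a second factor.

    Returns "grant", "step_up" (ask for the second factor), or "deny".
    """
    face_ok = face_score >= face_threshold
    if not (face_ok and liveness_ok):
        # A failed face match or liveness check is never overridden by the second factor.
        return "deny"
    if second_factor_ok:
        return "grant"
    return "step_up"


print(decide_access(0.95, liveness_ok=True, second_factor_ok=True))   # grant
print(decide_access(0.95, liveness_ok=True, second_factor_ok=False))  # step_up
print(decide_access(0.95, liveness_ok=False, second_factor_ok=True))  # deny
```

The design choice here is that no single signal grants access on its own, so a Deepfake that fools the face matcher still has to defeat the other checks.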

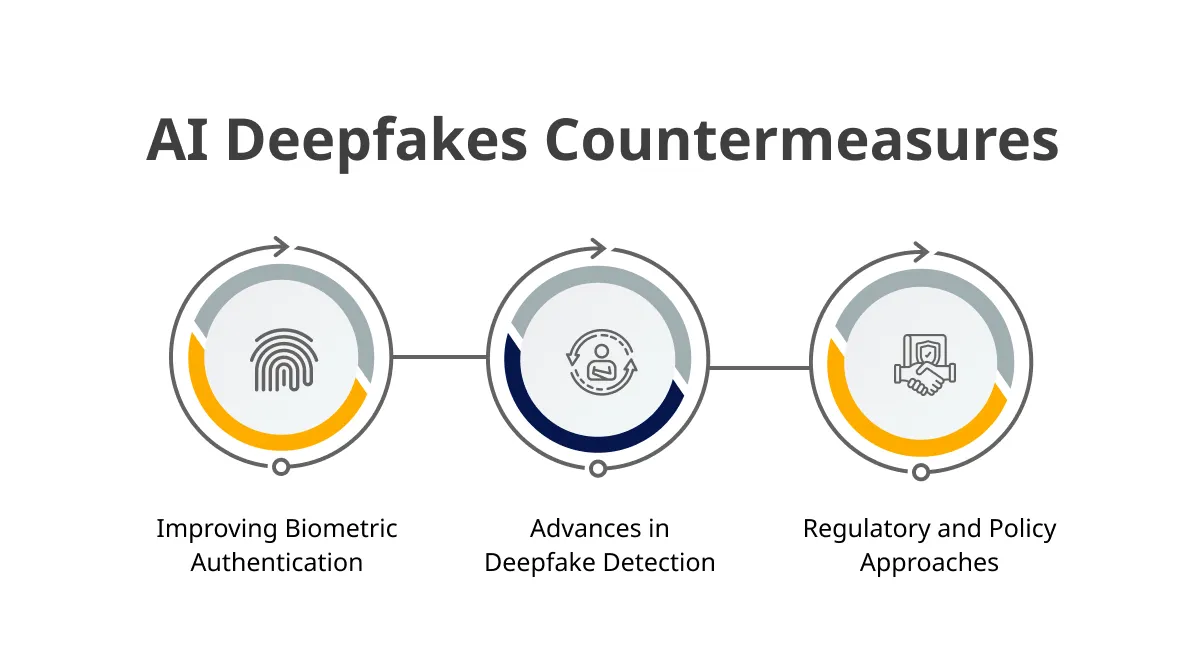

Countermeasures & Future Directions

The following sections cover practical steps for improving the security of facial biometric systems, current efforts to detect Deepfake biometrics threats, and the importance of regulatory measures in addressing the challenges Deepfakes pose.

Improving Biometric Authentication

To strengthen facial biometric systems against Deepfakes, several strategies can be employed:

- Multi-Factor Authentication: Combining facial recognition with additional verification methods, such as fingerprint scanning or passcodes, can add layers of security. This approach reduces the risk of a Deepfake alone compromising the system.

- Liveness Detection: Liveness detection techniques help distinguish between a real person and a Deepfake. These include analyzing subtle movements, checking for 3D depth in the face, and assessing eye reflections or blinking patterns.

- Continuous Monitoring: Instead of relying solely on a one-time authentication check, monitoring throughout a session can help detect anomalies. If the system identifies suspicious activity, it can prompt re-authentication or flag the session for review.
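
One of the liveness signals mentioned in the list above, blinking, can be sketched as follows. The example assumes some upstream component (not shown, and hypothetical here) already produces a per-frame eye-openness value between 0 and 1; the `0.2` cut-off and the function names are also assumptions.

```python
def count_blinks(eye_openness, closed_below=0.2):
    """Count closed-then-reopened transitions in a per-frame eye-openness series."""
    blinks = 0
    eye_closed = False
    for value in eye_openness:
        if value < closed_below:
            eye_closed = True
        elif eye_closed:
            blinks += 1  # the eye reopened after being closed
            eye_closed = False
    return blinks


def passes_blink_check(eye_openness, min_blinks=1, closed_below=0.2):
    return count_blinks(eye_openness, closed_below) >= min_blinks


live_capture = [0.30, 0.31, 0.10, 0.08, 0.30, 0.32, 0.05, 0.30]
printed_photo = [0.30] * 8  # a static image never blinks

print(passes_blink_check(live_capture))   # True
print(passes_blink_check(printed_photo))  # False
```

A check like this only stops static images. A Deepfake video can blink too, which is why blinking is normally combined with other signals such as depth or challenge-response prompts.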

Advances in Deepfake Detection

The fight against Deepfakes is an ongoing battle, with researchers and tech companies developing new tools to detect and mitigate these threats:

- AI and Machine Learning: Advanced algorithms are being trained to detect inconsistencies in Deepfakes, such as unnatural facial movements, irregular blinking patterns, or digital artifacts that human eyes might miss.

- Blockchain Technology: Some experts are exploring the use of blockchain to verify the authenticity of videos and images, creating a traceable and tamper-evident record of the media’s origin and alterations.

- Collaborative Databases: Collaborative efforts are underway to create extensive databases of known Deepfakes. These databases help improve the accuracy of detection tools by providing a wide range of examples for training and testing.
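
The blockchain idea in the list above rests on a simpler building block: a chain of hashes in which each record commits to the one before it. The sketch below shows only that building block, using Python's standard `hashlib`; it is not a blockchain (there is no network or consensus), and the record fields and function names are assumptions for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(record_body):
    payload = json.dumps(record_body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def add_record(chain, media_bytes, note):
    """Append a provenance record that commits to the media and the previous record."""
    body = {
        "media_hash": hashlib.sha256(media_bytes).hexdigest(),
        "note": note,
        "prev_hash": chain[-1]["record_hash"] if chain else GENESIS,
    }
    record = dict(body, record_hash=_digest(body))
    chain.append(record)
    return record


def verify_chain(chain):
    """Return True only if every record is intact and correctly linked."""
    prev_hash = GENESIS
    for record in chain:
        body = {k: v for k, v in record.items() if k != "record_hash"}
        if record["prev_hash"] != prev_hash or _digest(body) != record["record_hash"]:
            return False
        prev_hash = record["record_hash"]
    return True


chain = []
add_record(chain, b"original video bytes", "captured by camera")
add_record(chain, b"cropped video bytes", "cropped for publication")
print(verify_chain(chain))   # True

chain[0]["note"] = "edited history"
print(verify_chain(chain))   # False
```

Changing any earlier record changes its hash and breaks every later link, which is what makes the history tamper-evident. It proves that a record was not altered after the fact; it does not prove that the original media was genuine.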

Regulatory and Policy Approaches

Addressing the threat of Deepfakes requires more than just technological solutions; it also demands strong regulatory frameworks and international cooperation:

- Setting Standards: Governments and industry bodies are working to establish standards for biometric systems that include requirements for Deepfake detection and resilience.

- Legal Measures: Legislation aimed at criminalizing the malicious use of Deepfakes is being considered or enacted in various countries. These laws aim to deter the creation and distribution of harmful Deepfakes.

- Global Cooperation: Since Deepfakes are a global issue, international cooperation is crucial. Cross-border collaboration on research, information sharing, and harmonizing regulations can help mitigate the risks associated with Deepfakes and protect biometric systems worldwide.

Conclusion

As we have seen, Deepfakes’ potential to exploit vulnerabilities in facial biometric authentication systems has become increasingly evident. The technology we rely on for security could be weaponized against us.

While advancements in AI can strengthen defense, they also raise the stakes in the battle between innovation and security. The question is no longer if Deepfakes will impact our digital safety but how quickly we can adapt to protect against this emerging threat.

Now is the time to act against the Deepfake biometrics threat, before the line between real and fake blurs beyond recognition. To secure your future projects, connect with our experts at ValueCoders (India’s leading AI development company).

Established in 2004, we have successfully delivered 4,200+ projects to our happy clients, including Dubai Police, Spinny, Infosys, etc. Hire AI engineers from us today!