According to a 2023 Research and Markets report, the global big data and analytics market is expected to reach $662 billion by 2028, growing at a CAGR of 14.48%. This growing demand has driven widespread adoption of data analytics tools, giving enterprises faster, data-driven decision-making.

As businesses base more of their strategic decisions on data insights, the demand for high-performance, scalable, and efficient processing solutions has increased. Apache Spark is at the forefront, helping businesses process massive amounts of information in real time.

What is Apache Spark?

Apache Spark is a leading open-source framework for large-scale data processing. It uses in-memory caching and optimized query execution to run fast queries against large datasets.

- Started as a research project at UC Berkeley in 2009.

- Currently used by 80% of Fortune 500 companies.

- Supports multiple programming languages, including Java, Scala, Python, and R.

- Versatile processing – Handles batch processing, real-time analytics, and machine learning.

- Scalable for businesses of all sizes – Works on both small and large-scale data workloads.

Put data-driven insights to work with our data analytics services. We help you enhance decision-making and drive business growth.

The Evolution of Big Data Analytics

Traditional frameworks like Hadoop MapReduce suffered from high latency because they wrote intermediate results to disk between processing steps. Apache Spark overcomes these challenges by:

- Using in-memory computing for up to 100x faster processing on some workloads.

- Enabling real-time data analysis through micro-batching.

- Supporting advanced AI and machine learning solutions for deeper insights.
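To make the micro-batching idea concrete, here is a minimal plain-Python sketch. It is not Spark's API. It groups an incoming event stream into small batches and processes each batch as a unit, which is the general idea behind micro-batch streaming. The `micro_batches` and `process_stream` helpers, the batch size, and the sample events are all illustrative assumptions.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def micro_batches(events: Iterable[int], batch_size: int) -> Iterator[List[int]]:
    """Yield consecutive batches of at most `batch_size` events.

    Hypothetical helper for illustration. Spark Streaming cuts batches by
    time interval; this toy cuts them by count to stay deterministic.
    """
    iterator = iter(events)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def process_stream(events: Iterable[int], batch_size: int) -> List[int]:
    """Compute one aggregate (a sum) per micro-batch."""
    return [sum(batch) for batch in micro_batches(events, batch_size)]


# Seven events arrive; they are handled as batches of three, three, and one.
batch_totals = process_stream(range(1, 8), batch_size=3)
print(batch_totals)
```

Smaller batches lower the delay before each result is available, at the cost of more per-batch overhead.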

How Apache Spark Works for Big Data

Apache Spark follows a master-worker architecture that enables parallel and distributed data processing for high-speed analytics. It optimizes computation using in-memory processing and task scheduling through the following key components:

- Driver program – Acts as the central coordinator, managing job execution and task scheduling across the cluster.

- Cluster manager – Allocates computing resources and manages workload distribution across worker nodes.

- Worker nodes (executors) – Perform assigned tasks, process data in parallel, and store results for further computation.

Spark’s Directed Acyclic Graph (DAG)-based execution model and in-memory processing make it significantly faster and more efficient than traditional disk-based big data frameworks.
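The coordinator-and-workers flow described above can be sketched in plain Python. This is a toy model under stated assumptions, not Spark code: `LazyDataset` is a hypothetical class, the "plan" is a simple linear chain rather than a full DAG, and threads stand in for worker nodes. It shows the two ideas that matter: transformations are only recorded until an action runs, and each partition is processed in parallel in memory.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

Step = Tuple[str, Callable]


class LazyDataset:
    """Toy stand-in for a distributed dataset (hypothetical, not a Spark class)."""

    def __init__(self, partitions: List[List[int]], steps: Tuple[Step, ...] = ()):
        self.partitions = partitions
        self.steps = steps  # recorded plan; nothing has run yet

    def map(self, fn: Callable[[int], int]) -> "LazyDataset":
        # Transformation: only extend the plan, do no work.
        return LazyDataset(self.partitions, self.steps + (("map", fn),))

    def filter(self, pred: Callable[[int], bool]) -> "LazyDataset":
        # Transformation: only extend the plan, do no work.
        return LazyDataset(self.partitions, self.steps + (("filter", pred),))

    def _run_partition(self, rows: List[int]) -> List[int]:
        # "Worker" side: apply every recorded step to one partition in memory.
        for kind, fn in self.steps:
            if kind == "map":
                rows = [fn(r) for r in rows]
            else:
                rows = [r for r in rows if fn(r)]
        return rows

    def collect(self) -> List[int]:
        # Action: the "driver" schedules one task per partition, then merges.
        workers = max(1, len(self.partitions))
        with ThreadPoolExecutor(max_workers=workers) as pool:
            results = list(pool.map(self._run_partition, self.partitions))
        return [row for part in results for row in part]


data = LazyDataset([[1, 2, 3], [4, 5, 6]])
plan = data.map(lambda x: x * 10).filter(lambda x: x > 20)
print(len(plan.steps))  # two steps recorded before any work is done
print(plan.collect())   # the action triggers execution on both partitions
```

Because the whole plan is known before execution starts, an engine built this way can chain steps on each partition without writing intermediate results to disk.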

Core Features That Make Spark Powerful

Quick Processing

- Works up to 100 times faster than older disk-based systems on some workloads

- Keeps frequently used data in memory for quick access

- Processes information in parallel across many computers

Grows With Your Needs

- Works well for both small businesses and large enterprises

- Runs on local computers or in the cloud

- Adds or removes processing power as needed

Handles Many Types of Work

- Processes large batches of data

- Analyzes information as it comes in

- Runs machine learning algorithms

- Handles complex graph calculations

- Queries structured data using SQL

Keeps Data Safe

- Automatically rebuilds lost data by recomputing it from the recorded processing steps

- Can keep replicated or checkpointed copies of essential data

- Continues working even if some computers fail
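One way to picture this recovery behavior is lineage-based recomputation: instead of relying only on backups, the system remembers how each piece of data was derived and rebuilds it on demand. The sketch below is a plain-Python toy under that assumption. `LineagePartition` is a hypothetical class, not part of Spark.

```python
from typing import Callable, List, Optional


class LineagePartition:
    """Toy partition that remembers how it was derived (hypothetical class)."""

    def __init__(self, source: List[int], transforms: List[Callable[[int], int]]):
        self.source = source          # durable input, e.g. a file on disk
        self.transforms = transforms  # lineage: ordered steps to rebuild the data
        self.cached: Optional[List[int]] = None  # in-memory result; may be lost

    def compute(self) -> List[int]:
        # Replay every recorded step against the durable source.
        rows = list(self.source)
        for fn in self.transforms:
            rows = [fn(r) for r in rows]
        return rows

    def get(self) -> List[int]:
        # Rebuild from lineage whenever the cached copy is missing.
        if self.cached is None:
            self.cached = self.compute()
        return self.cached


part = LineagePartition([1, 2, 3], [lambda x: x + 1, lambda x: x * 2])
first = part.get()
part.cached = None      # simulate losing the machine that held this partition
recovered = part.get()  # recomputed from the source and the recorded steps
print(first, recovered)
```

The trade-off is that recovery costs compute time rather than extra storage, which is why long chains of steps are sometimes checkpointed.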

Hire remote Spark developers to integrate Spark’s advanced data processing into your business ecosystem.

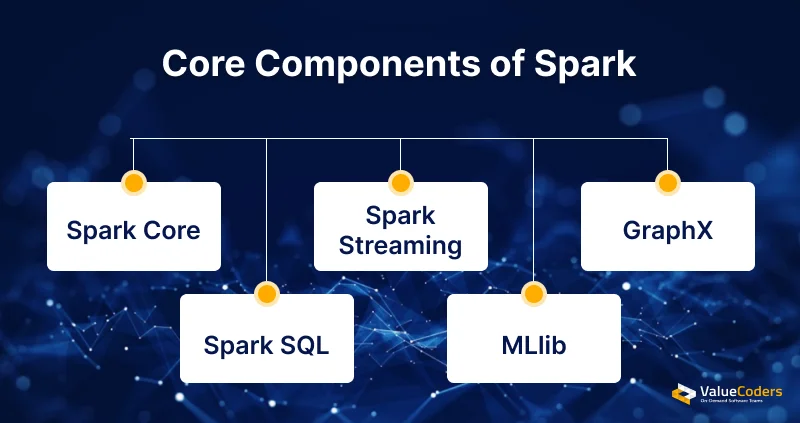

Key Components of Apache Spark

Apache Spark’s strength in big data analytics comes from its modular architecture. Let’s explore the core components that power Spark’s capabilities.

Spark Core

Manages task scheduling, memory, and I/O operations efficiently.

- The backbone of distributed computing in Spark.

- Supports all other modules seamlessly.

Spark SQL

Provides SQL-based querying for structured data.

- Simplifies database and warehouse integration.

- Enhances data exploration for analysts.

Spark Streaming

Processes real-time data streams for instant insights.

- Used in fraud detection and live analytics.

- Enables quick decision-making from live data.

MLlib

Offers scalable machine learning algorithms.

- Supports classification, clustering, and regression tasks.

- Simplifies predictive model development.

GraphX

Analyzes graph-based and network data effectively.

- Helps uncover insights from interconnected data.

- Useful for social networks and supply chain analysis.

Advantages of Using Apache Spark for Big Data

1. Faster Data Processing

Apache Spark’s in-memory computing accelerates data processing significantly.

- Processes data up to 100x faster than Hadoop MapReduce for some workloads.

- Enables parallel processing across distributed nodes efficiently.

2. Cost-Effectiveness in Cloud Environments

Spark optimizes cloud resources to reduce operational costs.

- Dynamically allocates resources based on workload demands.

- Integrates seamlessly with AWS, Azure, and Google Cloud platforms.
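As a rough illustration of workload-based resource allocation, the sketch below builds `spark-submit` arguments that turn on Spark's dynamic allocation settings. The `spark.dynamicAllocation.*` property names are real Spark configuration keys. The executor counts, the `to_submit_args` helper, and the `my_job.py` script name are assumptions chosen for the example, and the right values depend on your cluster and Spark version.

```python
from typing import Dict, List

# Dynamic allocation settings; the executor counts are illustrative assumptions.
DYNAMIC_ALLOCATION_CONF: Dict[str, str] = {
    "spark.dynamicAllocation.enabled": "true",
    "spark.dynamicAllocation.minExecutors": "1",
    "spark.dynamicAllocation.maxExecutors": "20",
    "spark.dynamicAllocation.shuffleTracking.enabled": "true",
}


def to_submit_args(conf: Dict[str, str]) -> List[str]:
    """Render settings as `--conf key=value` arguments for spark-submit."""
    args: List[str] = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


submit_args = to_submit_args(DYNAMIC_ALLOCATION_CONF)
# `my_job.py` is a hypothetical application script.
print(" ".join(["spark-submit", *submit_args, "my_job.py"]))
```

With settings like these, the cluster can add executors while a job is busy and release them when it is idle, which is where the cloud cost savings come from.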

3. Real-Time Data Processing and AI Support

Spark handles real-time data and supports machine learning solutions.

- Processes live data streams for fraud detection and analytics.

- The MLlib library simplifies predictive modeling and clustering tasks.

4. Flexibility Across Multiple Programming Languages

Spark supports various languages, enhancing team adaptability.

- Works with Scala, Python, Java, and R for diverse workflows.

- Simplifies integration into existing systems and tools.

Hiring remote Spark developers can help with multi-language implementations while keeping your team flexible, your costs under control, and support available.

Our Spark-powered solutions help you process live data streams for predictive analytics and AI automation.

Real-World Applications of Apache Spark

Financial Services: Advanced Fraud Prevention

Banks and financial institutions use Spark to detect fraud in real-time by analyzing millions of transactions simultaneously.

- Identifies suspicious patterns as transactions arrive

- Enables security teams to block fraudulent activity

- Improves monitoring and reduces the time needed to detect fraud

Healthcare: Data-Driven Patient Care

Healthcare providers leverage Spark to transform patient care through predictive analytics. The system processes patient data from electronic health records, monitoring devices, and clinical trials to:

- Predict patient deterioration 12-24 hours in advance

- Optimize resource allocation across hospital departments

- Identify high-risk patients for preventive intervention

- Reduce readmission rates through targeted care programs

eCommerce: Intelligent Customer Engagement

Leading online retailers implement Spark to power their recommendation engines. The platform processes customer behavior data to:

- Generate personalized product recommendations in real-time

- Analyze shopping patterns to optimize inventory

- Predict demand for seasonal products

- Improve conversion rates through targeted merchandising

Manufacturing: Predictive Maintenance Solutions

Manufacturing facilities use Spark to revolutionize their maintenance protocols. The system analyzes real-time sensor data from production equipment to:

- Predict equipment failures with 85% accuracy

- Reduce unplanned downtime by 30%

- Optimize maintenance schedules

- Lower maintenance costs by 25%

Telecommunications: Network Optimization

Telecom providers utilize Spark to enhance network performance and customer experience. The platform enables providers to:

- Monitor network quality in real-time

- Identify service degradation before it affects customers

- Optimize resource allocation during peak usage

- Reduce customer churn through proactive service improvement

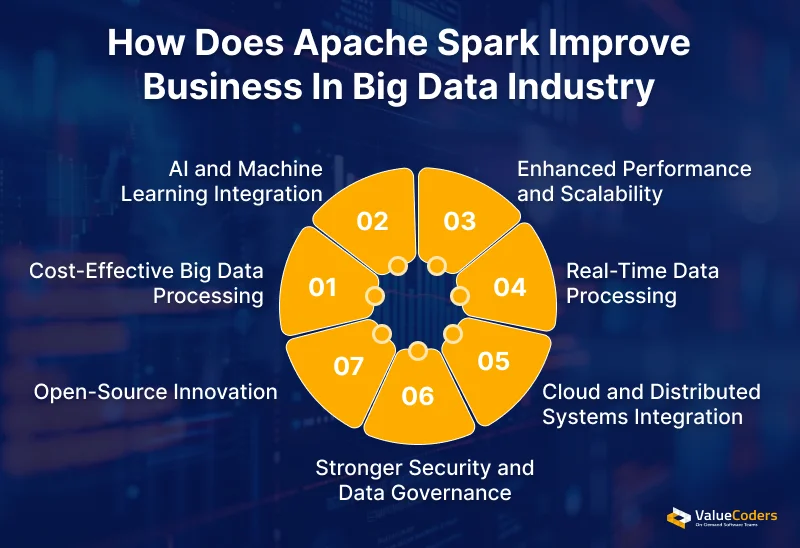

The Future of Apache Spark in Big Data Analytics

Given its speed, adaptability, and real-time processing abilities for large datasets, Apache Spark has become a popular choice among businesses and organizations.

Here are the key trends shaping Apache Spark’s future in big data analytics:

1. Cost-Effective Big Data Processing

Apache Spark is making big data processing more affordable by optimizing hardware and cloud resource usage.

- Connects quickly with AWS, Azure, and Google Cloud services.

- Scales resources to match workload requirements.

- Reduces the hardware needed to keep operations running.

2. AI and Machine Learning Integration

Apache Spark is becoming a key player in AI and machine learning, enabling smarter data-driven decisions.

- MLlib evolves with tools for predictive modeling and clustering tasks.

- Works well with TensorFlow, PyTorch, and other popular AI frameworks.

- Combines real-time processing with ML for faster, proactive decision-making.

3. Enhanced Performance and Scalability

Apache Spark is set to become faster and more scalable, solidifying its role in big data analytics.

- New optimizations speed up even the most complex query executions.

- Improves resource management for large-scale analytics workflows.

- Distributes tasks across clusters for smooth performance with massive datasets.

4. Real-Time Data Processing

Real-time data processing is transforming industries, and Apache Spark is leading this innovation.

- Spark Streaming processes live data streams quickly and accurately.

- Enables instant decisions in fraud detection and customer service sectors.

- Reduces delays by delivering actionable insights in near real-time.

5. Cloud and Distributed Systems Integration

Apache Spark enhances cloud integration, supporting modern big data needs effectively.

- Ensures deep compatibility with AWS, Azure, and Google Cloud tools.

- Simplifies moving workloads to the cloud with minimal disruptions.

- Splits tasks across servers for faster processing of huge datasets.

6. Stronger Security and Data Governance

Apache Spark is stepping up to meet stricter data privacy laws and ensure secure operations.

- Protects sensitive data during transfer and storage with robust protocols.

- Adds role-based permissions and audit logs for enhanced security.

- Supports compliance with global regulations like GDPR, HIPAA, and CCPA when deployed with appropriate controls.

7. Open-Source Innovation

Apache Spark’s open-source nature drives continuous growth and innovation in big data analytics.

- Developers worldwide contribute to rapid feature updates and improvements.

- Expands libraries and connectors for tailored big data solutions.

- Integrates smoothly with Hadoop, Kafka, and Flink for end-to-end pipelines.

With these advancements, Apache Spark is shaping the future of data engineering and paving the way for next-generation big data analytics.

ValueCoders helps you turn large datasets into powerful insights with Apache Spark.

Conclusion

As Apache Spark transforms big data analytics, the right expertise determines success. ValueCoders delivers this expertise through:

- Proven Apache Spark Implementation

- Cost-Effective Scalability

- End-to-End Data Strategy

- Industry-Specific Solutions

- Security-First Approach

ValueCoders combines technical mastery with business acumen to turn your data into a competitive advantage. Our big data consulting & management services transform complex challenges into measurable outcomes. Partner with ValueCoders to unleash the full potential of Apache Spark and your data.